Our lab’s goal is to understand how animals and people can use the same set of neurons to learn and perform so many different behaviors. To do this, we take three general approaches:

- theory (e.g., normative models: “How should someone perform this task?”)

- machine learning (e.g., artificial agents trained with reinforcement learning)

- neural data analysis (e.g., statistical models of high-dimensional neural activity)

How do neural populations support multiple behaviors?

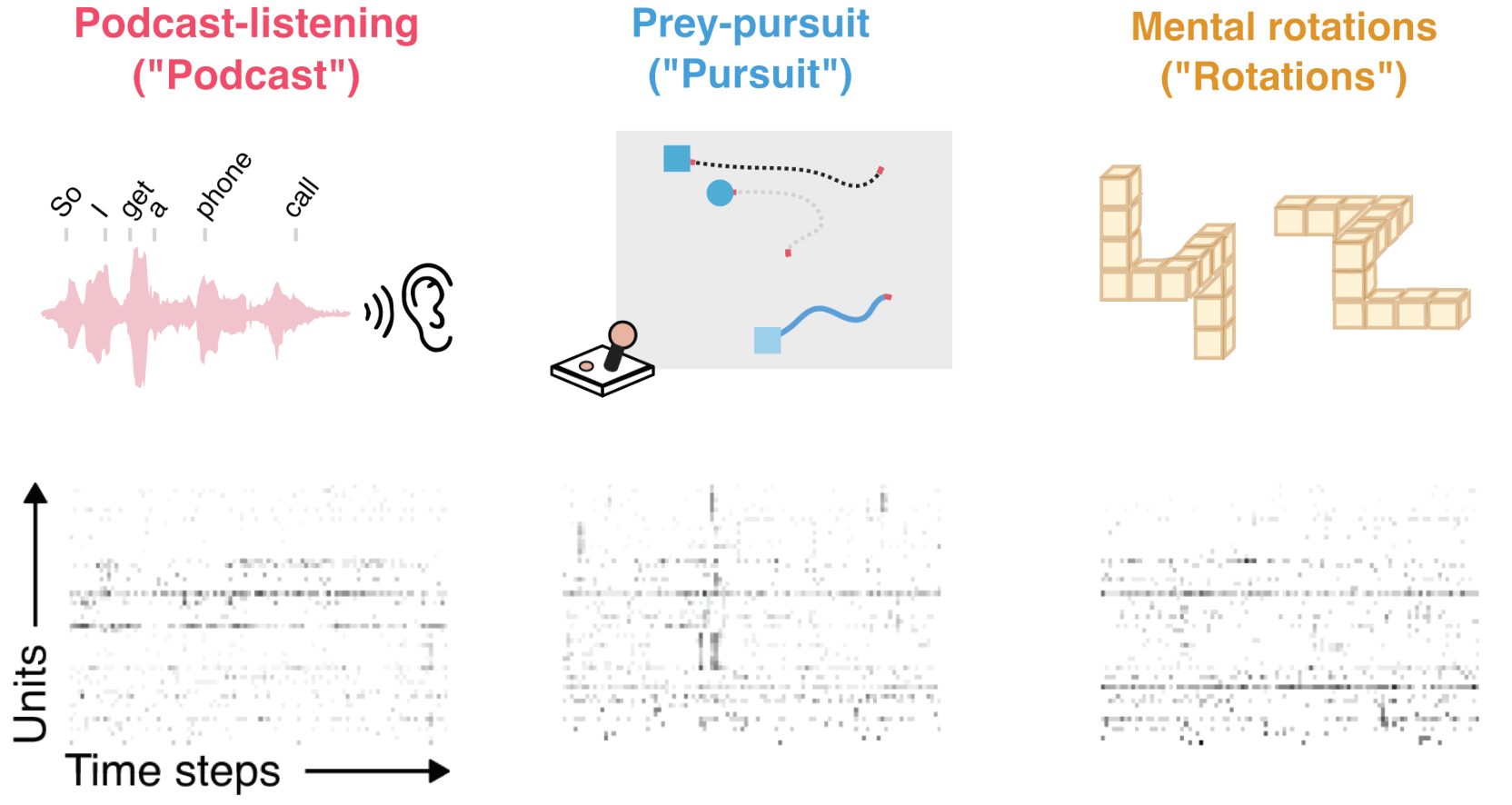

We have human patients perform a variety of tasks spanning multiple cognitive, sensory, and motor domains. Tasks include video games like Pacman as well as classic psychophysics tasks.

Alongside these tasks, we record spiking activity in human hippocampus and cortex 24/7 across multiple days.

Some questions we’re interested in:

- Do correlations between neurons remain stable even across unrelated tasks?

- Can we predict a neuron’s activity during a task before the patient has even started the task?

Our lab collaborates closely with the labs of Sameer Sheth, Ben Hayden, Nicole Provenza, Eleonora Bartoli, and Sarah Heilbronner at Baylor College of Medicine.

How do animals learn from reward?

We are interested in understanding reinforcement learning in the dopamine system, using recurrent neural network (RNN) models and recordings from neural populations in the prefrontal cortex, amygdala, and basal ganglia.

Some questions we’re interested in:

- What is the neural basis of meta-learning, or “learning to learn”?

- How do neurons develop representations of uncertainty?

- How do animals rapidly learn new reward associations?

You can browse our publications for summaries of our work in these areas.